Introduction to the Challenge

In 2024, the WARA Robotics Mobile Manipulation Challenge took place. Its primary objective was to invigorate robotics research among the partner sites at WASP universities. Focused on the intricate domain of mobile manipulation, the challenge posed a demanding task that required innovative solutions. By formulating a specific challenge, we aimed to inspire research teams to devise novel approaches to the complexities of mobile manipulation.

The challenge was adapted from an AstraZeneca use case in the lab automation domain. The task was to localize carts loaded with glassware that needs washing, transport them to a dishwasher room, and load the glassware into the dishwasher. The motivation is laid out in the challenge description:

The envisioned scenario for the WARA Robotics challenge is grounded in the realm of lab automation, motivated by the challenges prevalent in scientific research, particularly within various biomedical domains. Despite advancements in research methodologies, many biomedical tasks continue to rely heavily on manual labor. The challenge addresses a specific but essential sub-task persistently performed by lab assistants worldwide: washing glassware. While crucial for ensuring an adequate supply of clean equipment, this task does not demand specialized knowledge, yet consumes the valuable time of highly qualified research personnel.

Thus, the challenge we pose to the WASP community involves the development of a mobile manipulation system capable of partially automating this process. The system must autonomously navigate safely in a human-populated lab environment, localize carts loaded with glassware that needs washing, transport these carts to a designated dishwasher room, and manipulate the glassware for loading into an industrial dishwasher. Successful solutions to this challenge hold the potential to significantly alleviate the labor-intensive nature of lab work, allowing researchers to allocate their expertise to more complex scientific endeavors.

Challenge Description WARA Robotics 2024

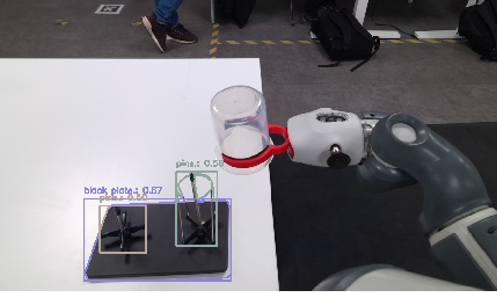

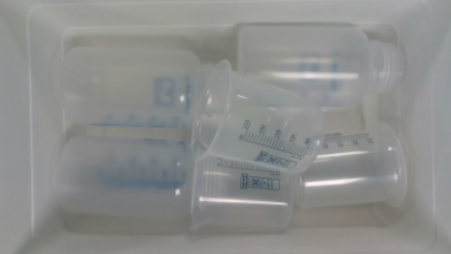

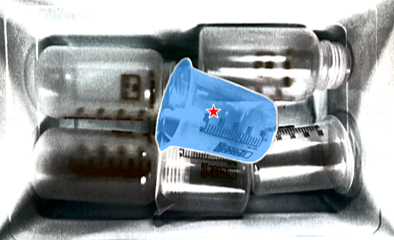

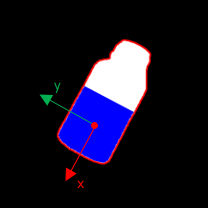

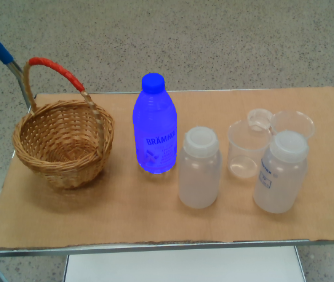

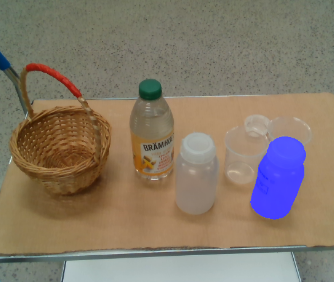

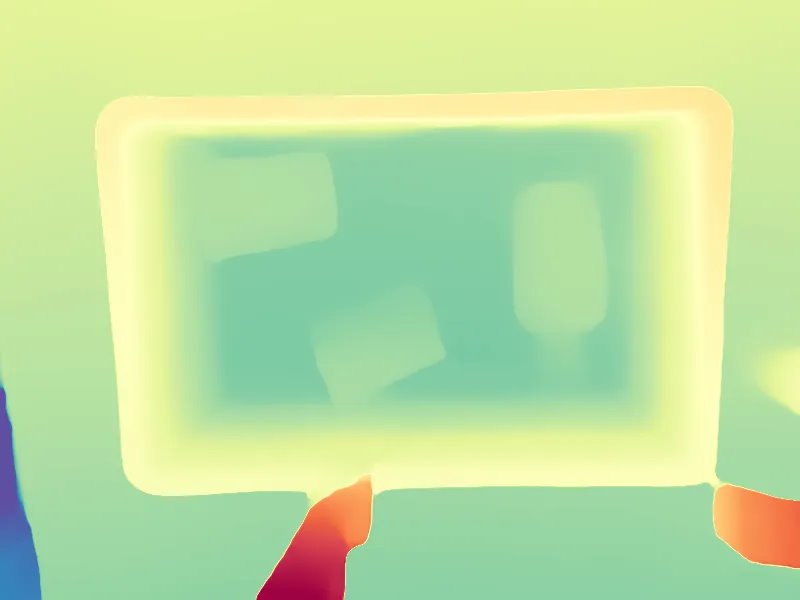

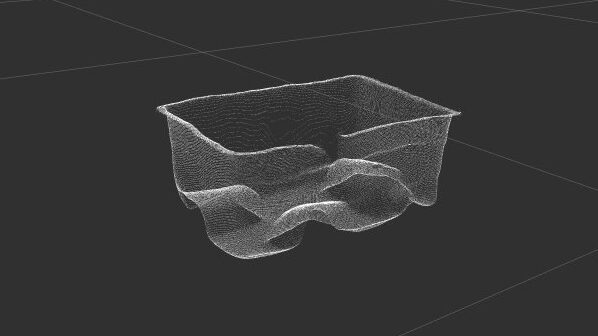

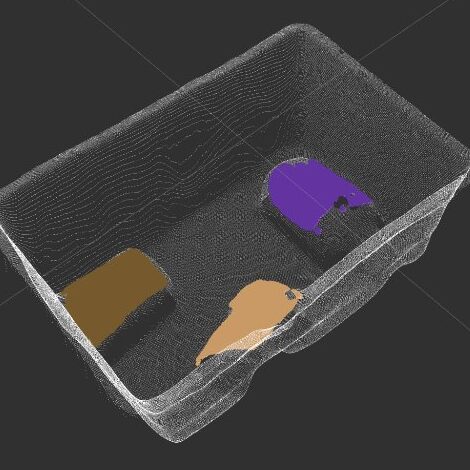

The figures below depict the current state of affairs. As can be seen, the challenge spans many research areas, such as navigation, full-body control, manipulation, bin picking, (transparent) object detection, and task planning.

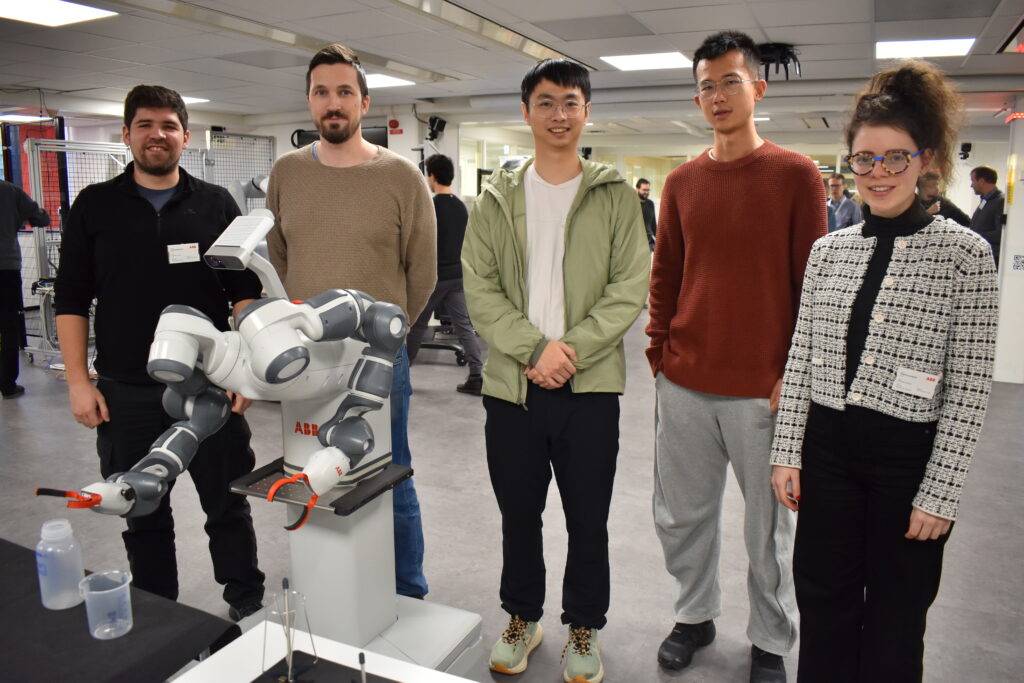

The challenge kicked off in May, with the final demonstration day on the 16th of December. There, each participating team had 15 minutes to set up and demonstrate its solution. The winner, selected by a jury of robotics experts and stakeholders, won a trip to Automatica 2025. Four teams participated in the challenge: Kungliga Tekniska Högskolan (KTH), Politecnico di Milano, Lunds Tekniska Högskolan (LTH), and Örebro Universitet.

The teams’ solutions

KTH

The team from KTH strived to complete the full challenge from cart detection to dishwasher loading. While they unfortunately ran out of time to connect everything together for a robust demo, they showed off solutions to various parts of the pipeline on the demo day.

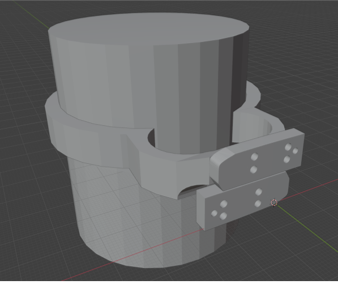

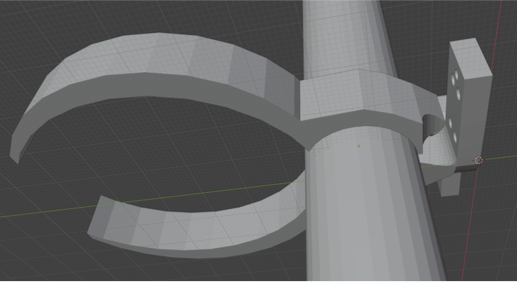

A notable part of their solution is a custom gripper design that works for both subtasks.

Cart detection and grasping: The cart is detected using HSV color masking and spatial filtering applied to its distinctive blue handlebar. The mobile YuMi then approaches the cart using visual servoing and grasps it with a specified force. The robot's stiffness is set through current-based impedance control.
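As a rough illustration of color-based handlebar detection, the sketch below thresholds pixels in HSV space and then applies a simple spatial filter that keeps only the largest connected blob, returning its centroid as a possible target for visual servoing. It is a minimal sketch assuming an RGB image given as nested lists of (r, g, b) tuples; the hue, saturation, and value thresholds and all function names are hypothetical, not KTH's actual parameters.

```python
import colorsys
from collections import deque

# Hypothetical thresholds for a "clear blue" handlebar (colorsys hue is in [0, 1)).
BLUE_HUE_RANGE = (0.55, 0.70)   # roughly 200 to 250 degrees
MIN_SATURATION = 0.5
MIN_VALUE = 0.3


def hsv_mask(image):
    """Boolean mask of pixels whose HSV values fall inside the blue range."""
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            mask_row.append(BLUE_HUE_RANGE[0] <= h <= BLUE_HUE_RANGE[1]
                            and s >= MIN_SATURATION and v >= MIN_VALUE)
        mask.append(mask_row)
    return mask


def largest_component(mask):
    """Spatial filtering: return the pixels of the largest 4-connected blob."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill of one blob.
            blob, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                blob.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(blob) > len(best):
                best = blob
    return best


def handlebar_centroid(image):
    """Pixel centroid (row, col) of the largest blue blob, or None if there is none."""
    blob = largest_component(hsv_mask(image))
    if not blob:
        return None
    n = len(blob)
    return (sum(r for r, _ in blob) / n, sum(c for _, c in blob) / n)
```

Keeping only the largest blob discards small blue specks elsewhere in the scene, which is one simple form of spatial filtering.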

For navigation while pushing the cart, 2D lidar-based SLAM is used. Through Nav2, the mobile YuMi has access to AMCL localization, collision avoidance, and path planning.
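AMCL is an adaptive variant of Monte Carlo localization, i.e. a particle filter. The sketch below shows the basic predict, weight, and resample cycle in one dimension, using a single range measurement to a known landmark. It is an illustrative assumption, not Nav2's AMCL implementation: the adaptive (KLD-based) sample-size control, the 2D lidar beam model, and the occupancy map are all omitted, and the noise values are hypothetical.

```python
import math
import random


def likelihood(measured, expected, sigma):
    """Unnormalized Gaussian likelihood of a range measurement."""
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)


def low_variance_resample(particles, weights, rng):
    """Systematic (low-variance) resampling: one random offset, n evenly spaced pointers."""
    n = len(particles)
    total = sum(weights)
    if total == 0.0:
        return list(particles)  # every weight underflowed; keep the set unchanged
    step = total / n
    r = rng.uniform(0.0, step)
    out, i, cumulative = [], 0, weights[0]
    for m in range(n):
        u = r + m * step
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        out.append(particles[i])
    return out


def mcl_step(particles, control, measurement, landmark, rng,
             motion_noise=0.05, sensor_noise=0.2):
    """One predict-weight-resample cycle of 1D Monte Carlo localization."""
    # Prediction: move each particle by the commanded displacement plus Gaussian noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # Correction: weight each particle by how well its predicted range to the
    # landmark matches the actual measurement.
    weights = [likelihood(measurement, landmark - p, sensor_noise) for p in moved]
    # Resampling: draw a new particle set in proportion to the weights.
    return low_variance_resample(moved, weights, rng)
```

Starting from particles spread uniformly over the corridor, a few cycles concentrate them around the true position.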

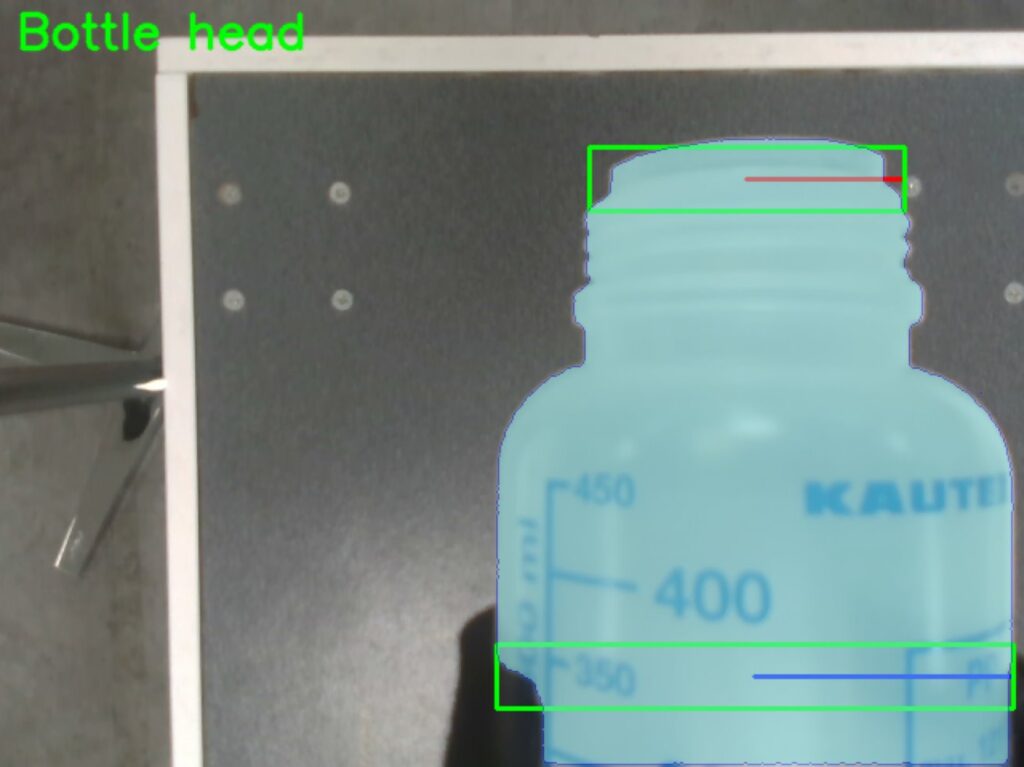

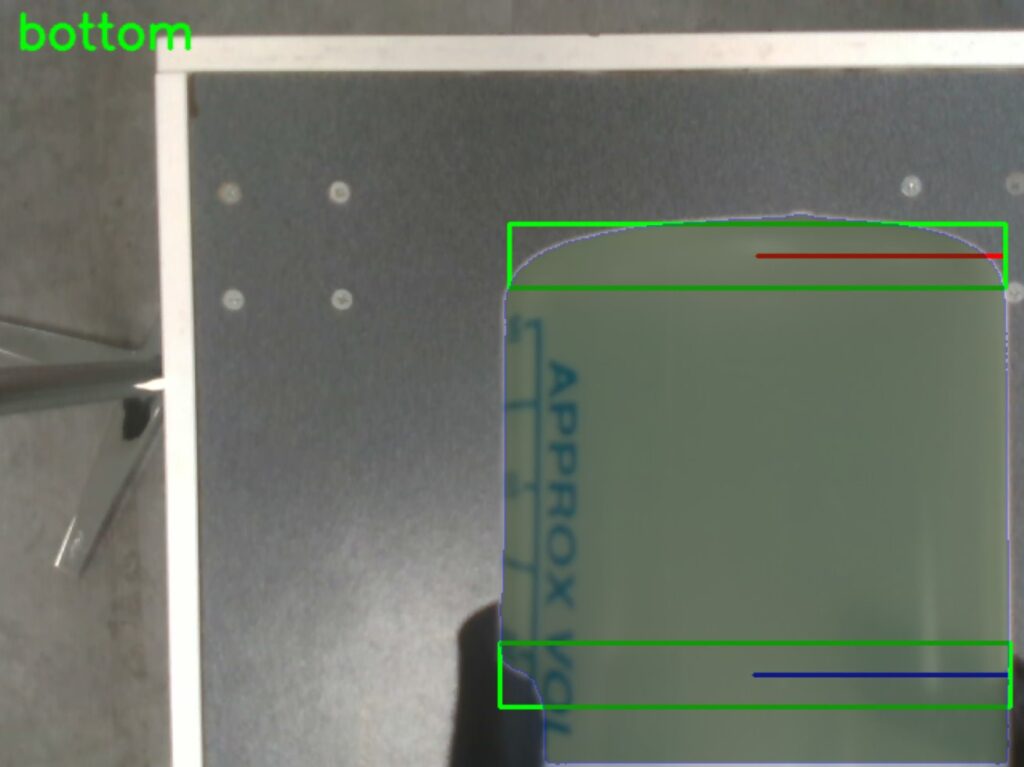

Dishwasher loading: To locate the cups and bottles, YOLO was used to obtain bounding boxes. From a bounding box and the camera's depth measurement, the x and y positions are recovered by back-projecting through the camera projection matrix, while the z position is obtained using plane detection.
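The back-projection step follows the standard pinhole model: a pixel (u, v) with metric depth Z maps to X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy in the camera frame. The sketch below applies this to the center of a bounding box and, separately, computes a point's signed distance to a detected plane such as the table. The intrinsic values, the choice of the box center, and the function names are illustrative assumptions.

```python
import math


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with metric depth into camera coordinates."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


def bbox_center_position(bbox, depth, intrinsics):
    """3D position of a bounding-box center; bbox = (u_min, v_min, u_max, v_max)."""
    u_min, v_min, u_max, v_max = bbox
    u = (u_min + u_max) / 2.0
    v = (v_min + v_max) / 2.0
    fx, fy, cx, cy = intrinsics
    return back_project(u, v, depth, fx, fy, cx, cy)


def signed_distance_to_plane(point, normal, offset):
    """Signed distance from a point to the plane normal . p + offset = 0."""
    norm = math.sqrt(sum(n * n for n in normal))
    return (sum(n * p for n, p in zip(normal, point)) + offset) / norm
```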

For the dishwasher tray, Grounding DINO was used to detect bounding boxes for the plate and the pins. Either the point cloud or the highest bounding box can be used to identify the tall pin. Applying PCA to the point cloud, together with a convex hull algorithm, yields the corners and the pin locations.
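One way PCA and a convex hull can combine to find tray corners is sketched below: PCA on the (projected, 2D) points gives the tray's principal axes, and the extreme projections along those axes, which always lie on convex hull vertices, give the corners of an oriented bounding box. This is an assumption about how the pieces fit together, working in 2D on already-projected points; the function names are hypothetical and pin localization is not shown.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def pca_axes(points):
    """Centroid plus major/minor axes of the 2x2 covariance matrix (closed form)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # angle of the principal eigenvector
    return (mx, my), (math.cos(theta), math.sin(theta)), (-math.sin(theta), math.cos(theta))


def oriented_box_corners(points):
    """Corners of the PCA-aligned bounding box, using only hull vertices for the extremes."""
    (mx, my), major, minor = pca_axes(points)
    hull = convex_hull(points)
    a = [(p[0] - mx) * major[0] + (p[1] - my) * major[1] for p in hull]
    b = [(p[0] - mx) * minor[0] + (p[1] - my) * minor[1] for p in hull]
    extremes = ((min(a), min(b)), (max(a), min(b)), (max(a), max(b)), (min(a), max(b)))
    return [(mx + s * major[0] + t * minor[0], my + s * major[1] + t * minor[1])
            for s, t in extremes]
```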

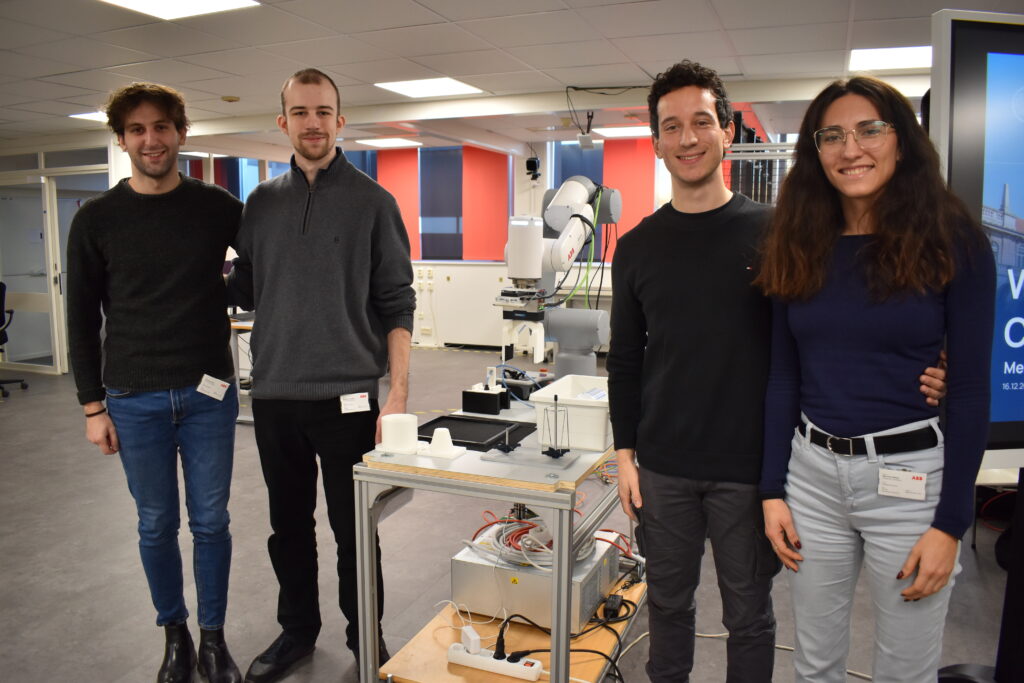

Politecnico di Milano

While the team from Politecnico did not prepare a solution for the cart-pushing subtask, they showed an impressive demo of the dishwasher-loading subtask using the GoFa 5 collaborative robot. Their pipeline consisted of three main steps:

- Bin picking: Bin picking was performed using YOLO for object detection together with SAM for object segmentation. After the detections are ordered by perceived depth, the topmost bottle or beaker is selected as the pick candidate. The team used a suction cup, which suits fragile glassware that could break if a gripper exerted too much force on it.

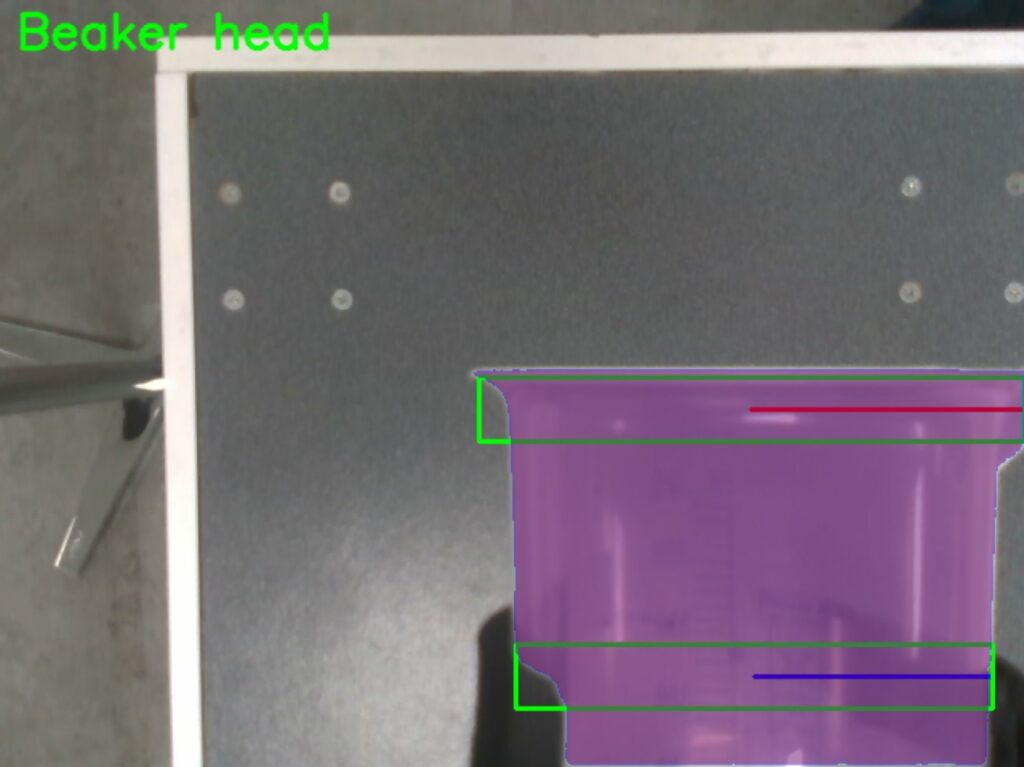

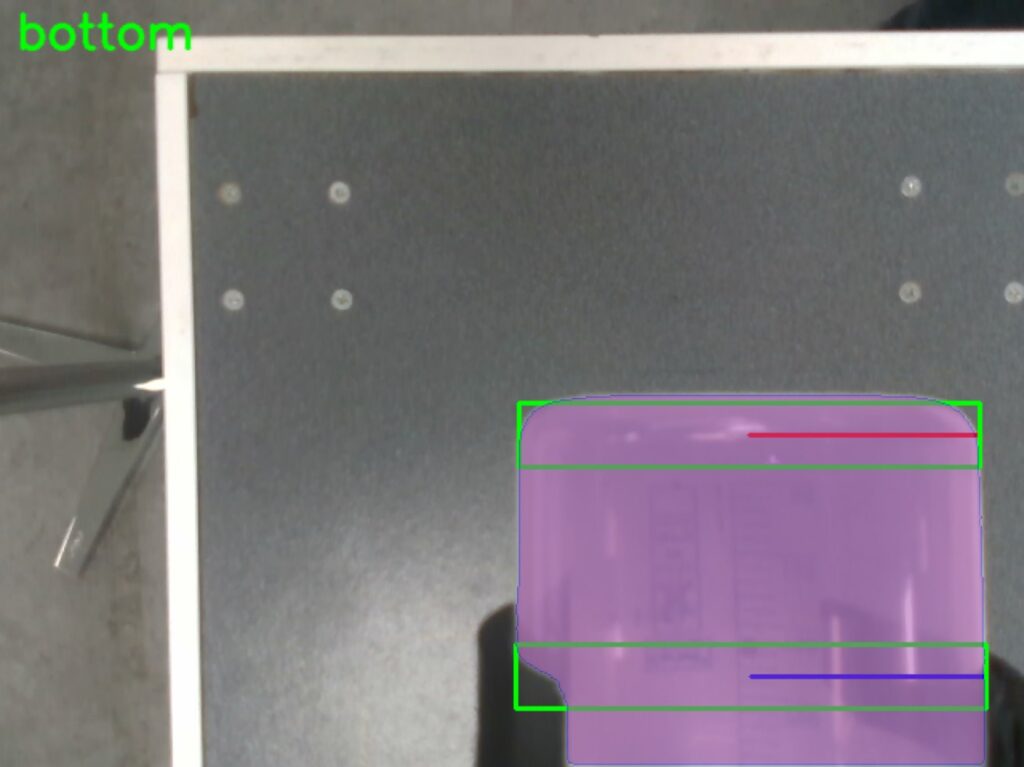

- Regrasping: Using YOLO for detection and SAM for object segmentation combined with feature detection, the system finds a grasp point and a trajectory that repositions the bottle or beaker into a known, repeatable pose for the final stage.

- Loading: Through kinesthetic teaching performed before execution, the robot knows which trajectory to apply to load the glassware onto the pins. Although teaching the robot the layout of all the pins adds some up-front effort, it is a one-time operation and results in a very robust and deterministic system, which is also easier to understand.
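Two pieces of this pipeline can be sketched in a few lines: choosing the pick candidate by perceived depth, assuming a camera looking down into the bin so that the smallest depth belongs to the topmost object, and densifying a kinesthetically taught list of waypoints into a path by linear interpolation. The `Detection` type, the function names, and the interpolation scheme are hypothetical; the team's actual trajectory representation is not described.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str       # e.g. "bottle" or "beaker"
    depth_m: float   # perceived distance from the (assumed overhead) camera


def pick_candidate(detections):
    """Return the detection closest to the camera (top of the pile), or None if empty."""
    return min(detections, key=lambda d: d.depth_m, default=None)


def densify(waypoints, steps):
    """Linearly interpolate between taught waypoints with `steps` samples per segment."""
    path = [waypoints[0]]
    for a, b in zip(waypoints, waypoints[1:]):
        for k in range(1, steps + 1):
            t = k / steps
            path.append(tuple(ai + t * (bi - ai) for ai, bi in zip(a, b)))
    return path
```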

LTH

Although the team from Lund was unable to present a physical demo, due to integration issues and possibly an overly ambitious scope, they presented a promising whole-body control approach along with other technologies.

Cart Pulling: The team decided to pull the cart instead of pushing it, since pulling requires less force on the wrist and is easier to model. To generate a collision-free path through the lab, where people may also be walking around, the team used Starshaped Obstacle Avoidance through Dynamical Systems (SOADS, a work by Albin Dahlin). SOADS models the motion as a dynamical system: a vector field over the free space specifies the direction of motion at every point, so the robot always knows where to go. The controller has to make the path followable, but a safety tunnel around the path allows for some deviation.
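SOADS builds on the idea of modulating a nominal dynamical system so that its flow avoids obstacles. The sketch below shows that underlying idea in its simplest form: a single circular obstacle in 2D and a linear attractor toward the goal, with the classic modulation that scales the velocity component along the obstacle normal by (1 - 1/Γ) and the tangential component by (1 + 1/Γ), where Γ = distance / radius equals 1 on the obstacle boundary. It is a simplification for illustration, not Dahlin's SOADS formulation, which handles general star-shaped obstacles; all names are hypothetical.

```python
import math


def attractor(x, goal):
    """Nominal linear dynamical system pulling toward the goal."""
    return (goal[0] - x[0], goal[1] - x[1])


def modulated_velocity(x, goal, center, radius):
    """Velocity of the nominal system after modulation around one circular obstacle."""
    dx, dy = x[0] - center[0], x[1] - center[1]
    dist = math.hypot(dx, dy)
    gamma = dist / radius                 # 1 on the boundary, > 1 outside
    n = (dx / dist, dy / dist)            # outward normal
    e = (-n[1], n[0])                     # tangent direction
    f = attractor(x, goal)
    f_n = f[0] * n[0] + f[1] * n[1]       # nominal velocity along the normal
    f_e = f[0] * e[0] + f[1] * e[1]       # nominal velocity along the tangent
    l_n = 1.0 - 1.0 / gamma               # vanishes on the boundary: no penetration
    l_e = 1.0 + 1.0 / gamma               # amplified tangential motion near the obstacle
    return (l_n * f_n * n[0] + l_e * f_e * e[0],
            l_n * f_n * n[1] + l_e * f_e * e[1])
```

A start point exactly on the line through the obstacle center and the goal is a saddle where the tangential component vanishes, so an integration starting slightly off that line slides around the obstacle and then converges to the goal.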

A path-following Model Predictive Controller (MPC) is used to follow an end-effector and/or base reference, using a Box-FDDP optimal control solver. The closed-loop inverse kinematics are computed using a basic damped pseudoinverse.
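A damped pseudoinverse replaces the plain pseudoinverse with J^T (J J^T + λ² I)^-1, which stays well conditioned near singularities at the cost of a slightly shorter step. The sketch below uses it in a closed-loop inverse kinematics iteration for a hypothetical two-link planar arm; the link lengths, the damping λ, and the gain are illustrative values, not the LTH team's.

```python
import math

LINK_1, LINK_2 = 0.5, 0.4  # hypothetical link lengths in metres


def fk(q):
    """Forward kinematics of a planar two-link arm: end-effector (x, y)."""
    return (LINK_1 * math.cos(q[0]) + LINK_2 * math.cos(q[0] + q[1]),
            LINK_1 * math.sin(q[0]) + LINK_2 * math.sin(q[0] + q[1]))


def jacobian(q):
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-LINK_1 * s1 - LINK_2 * s12, -LINK_2 * s12],
            [LINK_1 * c1 + LINK_2 * c12, LINK_2 * c12]]


def damped_pinv_step(q, target, damping=0.05, gain=0.5):
    """One CLIK update: dq = J^T (J J^T + damping^2 I)^-1 * error."""
    x, y = fk(q)
    err = (target[0] - x, target[1] - y)
    J = jacobian(q)
    # A = J J^T + damping^2 I, a symmetric 2x2 matrix [[a, b], [b, d]].
    a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
    d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
    det = a * d - b * b
    # w = A^-1 err, using the closed-form 2x2 inverse.
    w0 = (d * err[0] - b * err[1]) / det
    w1 = (-b * err[0] + a * err[1]) / det
    # dq = J^T w
    dq0 = J[0][0] * w0 + J[1][0] * w1
    dq1 = J[0][1] * w0 + J[1][1] * w1
    return (q[0] + gain * dq0, q[1] + gain * dq1)
```

Because the damping term only shortens each step, the iteration still settles where the task-space error is zero for a reachable target.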

Vision: For the dishwasher loading as well as the cart detection, a combination of Grounding DINO and Segment Anything (SAM) is used, followed by Random Sample Consensus (RANSAC) to obtain a detection.
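RANSAC repeatedly fits a model to a minimal random sample and keeps the hypothesis with the most inliers. The sketch below fits a plane to 3D points, one plausible use after segmentation (for example, a tray surface). Which geometric model the LTH team actually fitted is not stated, so the plane model, inlier threshold, iteration count, and function names are assumptions.

```python
import math
import random


def plane_from_points(p, q, r):
    """Plane (unit normal n, offset d) with n . x + d = 0 through three points, or None."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-9:
        return None  # degenerate (collinear) sample
    n = [c / norm for c in n]
    d = -sum(n[i] * p[i] for i in range(3))
    return n, d


def ransac_plane(points, iterations=200, threshold=0.01, seed=0):
    """Return the plane with the most inliers and the list of those inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [p for p in points
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```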

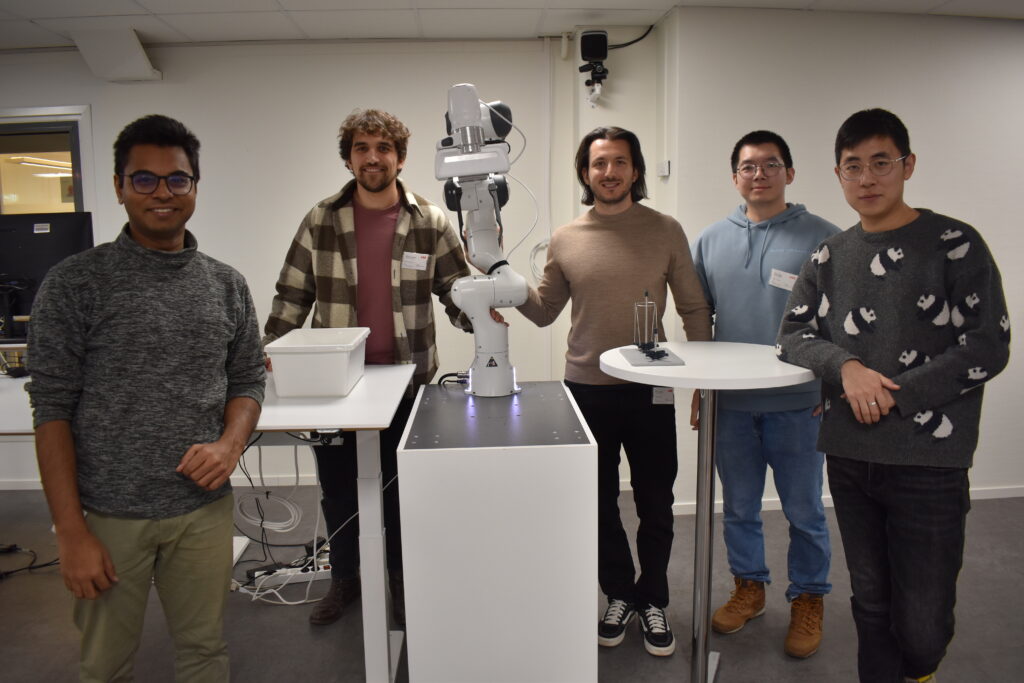

Örebro University

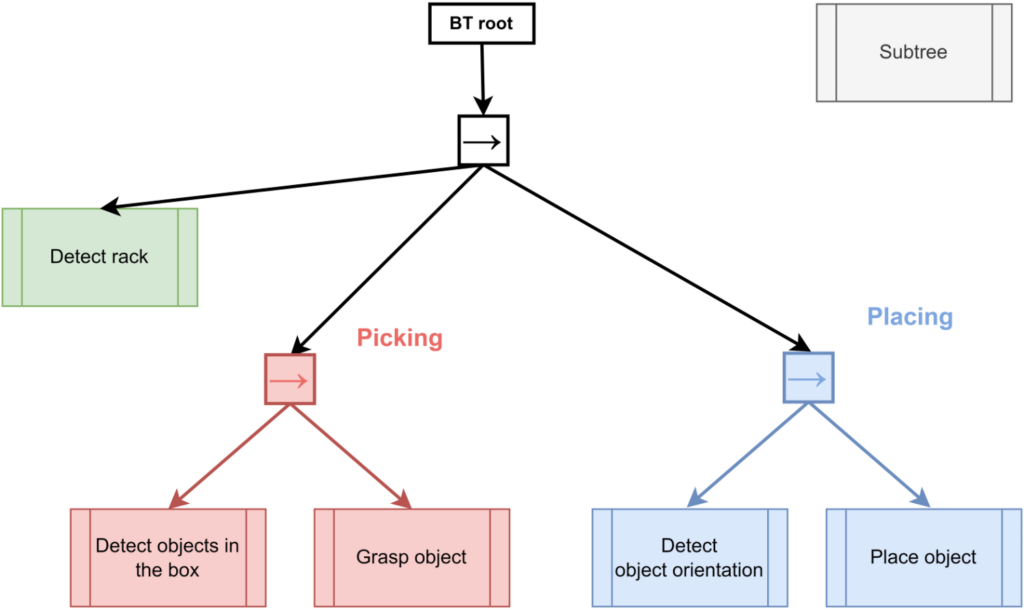

The team from Örebro focused on the dishwasher loading task and demonstrated a robust and efficient system on the demo day. Their use of a behaviour tree made the routine reactive to things that can go wrong. The tree consisted of three main steps: detecting the dishwasher rack, picking an item from the bin, and placing the item on the pins in the rack.
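A behaviour tree composes actions with control-flow nodes: a Sequence ticks its children in order and fails as soon as one fails, while a Fallback (selector) tries its children in order until one succeeds. The minimal sketch below, assuming synchronous actions that return only success or failure (no RUNNING state), wires the three steps in a Sequence and adds a hypothetical recovery action as a Fallback for placement. The node classes and action names are illustrative, not the Örebro team's tree.

```python
from enum import Enum


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


class Action:
    """Leaf node wrapping a callable that returns True on success."""
    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def tick(self, log):
        status = Status.SUCCESS if self.fn() else Status.FAILURE
        log.append((self.name, status))
        return status


class Sequence:
    """Ticks children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, log):
        for child in self.children:
            if child.tick(log) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, log):
        for child in self.children:
            if child.tick(log) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def build_tree(detect_rack, pick_item, place_item, recover_place):
    """Hypothetical tree: detect, pick, then place with a recovery fallback."""
    return Sequence(
        Action("detect_rack", detect_rack),
        Action("pick_item", pick_item),
        Fallback(Action("place_item", place_item),
                 Action("recover_place", recover_place)),
    )
```

If placement fails, the Fallback tries the recovery branch instead of aborting the whole routine, which is the kind of reactivity the tree provides.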

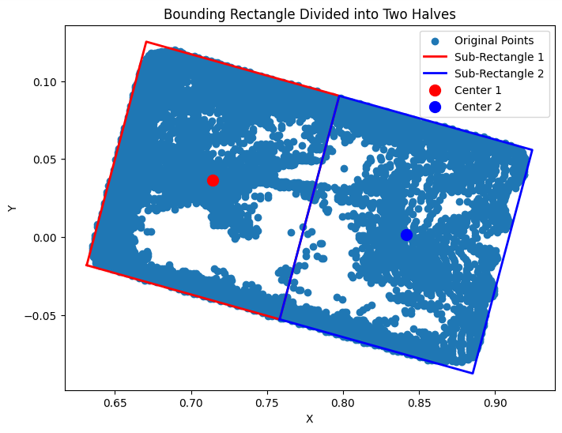

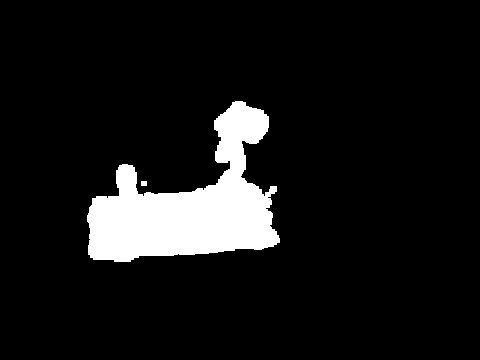

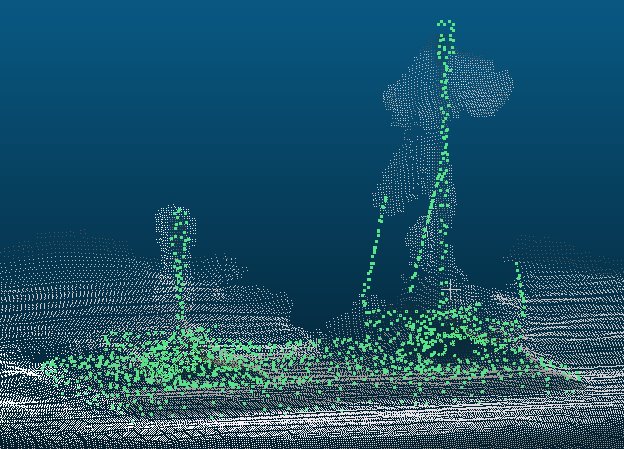

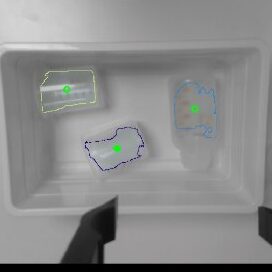

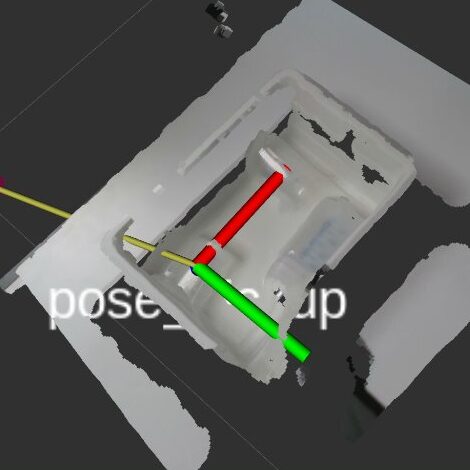

- Detecting the rack: FoundationPose was used to estimate the pose of the rack. It requires an object mask, which is obtained through point-cloud processing: plane segmentation isolates the points above the table, the largest cluster is assumed to be the rack, and these points are projected onto a 2D plane to obtain the mask. Furthermore, because FoundationPose might struggle with the rack's asymmetry, the average distance from the points in the cloud to the mesh is computed both at the estimated pose and at that pose rotated by 180 degrees, and the better-fitting pose is kept.

- Picking an item: To pick an item from the bin, the objects first need to be detected. This is done using depth predicted by the MoGe model, which proved more accurate than the depth measured by the RealSense camera. Next, cylinders are fitted, and the candidate matches are ranked by their number of pixels (higher is better) and aspect ratio (closer to 1 is better). A grasp is then attempted at the center of the highest-ranked cylinder.

- Placing the item: To place the item, the system must know which object was picked. Since the objects differ in width, this is determined from the gripper's opening width. The orientation of the object must also be known; to estimate it, the camera takes an image during transport, which is segmented with SAM2 for feature detection. If this detection goes wrong, the behaviour tree can still recover the placement when an unexpected force is detected during placement.
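Three of the decisions above are simple enough to sketch in a few lines: resolving the 180-degree ambiguity of the rack pose by comparing the mean point-to-model distance for the estimated pose and its flipped counterpart, ranking cylinder candidates, and identifying the grasped object from the gripper opening. The sketch works in 2D with the model given as sample points rather than a mesh, and assumes the flip is a rotation about the model origin. The combined ranking score, the object widths, and the tolerance are hypothetical; the text only states which criteria were used, not how they were weighted.

```python
import math


def transform(points, pose):
    """Apply a 2D pose (x, y, theta) to model points."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in points]


def mean_nn_distance(cloud, model_points):
    """Average distance from each observed point to its nearest model point."""
    return sum(min(math.dist(p, m) for m in model_points) for p in cloud) / len(cloud)


def resolve_flip(cloud, model, pose):
    """Keep whichever of the pose and its 180-degree rotation fits the cloud better."""
    flipped = (pose[0], pose[1], pose[2] + math.pi)
    return min((pose, flipped),
               key=lambda cand: mean_nn_distance(cloud, transform(model, cand)))


def rank_cylinders(candidates):
    """Sort (name, pixel_count, aspect_ratio) tuples, best first.

    Hypothetical score: pixel count, divided down as the aspect ratio moves away from 1.
    """
    return sorted(candidates, key=lambda c: c[1] / (1.0 + abs(c[2] - 1.0)), reverse=True)


# Hypothetical nominal gripper openings (metres) for each object type.
OBJECT_WIDTHS = {"bottle": 0.060, "beaker": 0.045}


def identify_by_width(gripper_width, tolerance=0.005):
    """Object whose nominal width is closest to the opening, or None if none is within tolerance."""
    name, width = min(OBJECT_WIDTHS.items(), key=lambda kv: abs(kv[1] - gripper_width))
    return name if abs(width - gripper_width) <= tolerance else None
```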

Conclusion

After a weekend hackathon of last-minute integration and improvement, it was time for the challenge day. In a packed lab here at the ABB Corporate Research Center in Västerås, the teams presented the technical details of their solutions and gave a one-shot demo. While all teams showed very interesting approaches and impressive demonstrations, there could of course be only one winner. After tough discussions within the jury – it was a very close call – the team of Örebro University came out as the winner. Congratulations!

The teams said they enjoyed participating in the challenge, as it gave them a good use case and material for interesting papers. We will probably also see more of them at WARA Robotics, as they reported a very positive experience with the available hardware and engineering support. More generally, WARA Robotics has shown that it is a great platform for pushing students to work on industry-relevant use cases and for increasing visibility and networking, which enhances collaboration.

Do you have a solution, for this challenge or any other use case, that you would like to try out on hardware? Please don’t hesitate to contact us! We would like to thank all the teams for participating and AstraZeneca for hosting this challenge with us. Once again, congratulations to the team from Örebro University, and we are looking forward to hosting more challenges in the coming years!